MENLO PARK, California--Meta Platforms used public Facebook and Instagram posts to train its new Meta AI virtual assistant, but excluded private posts shared only with family and friends in an effort to respect consumers' privacy, the company's top policy executive told Reuters in an interview.

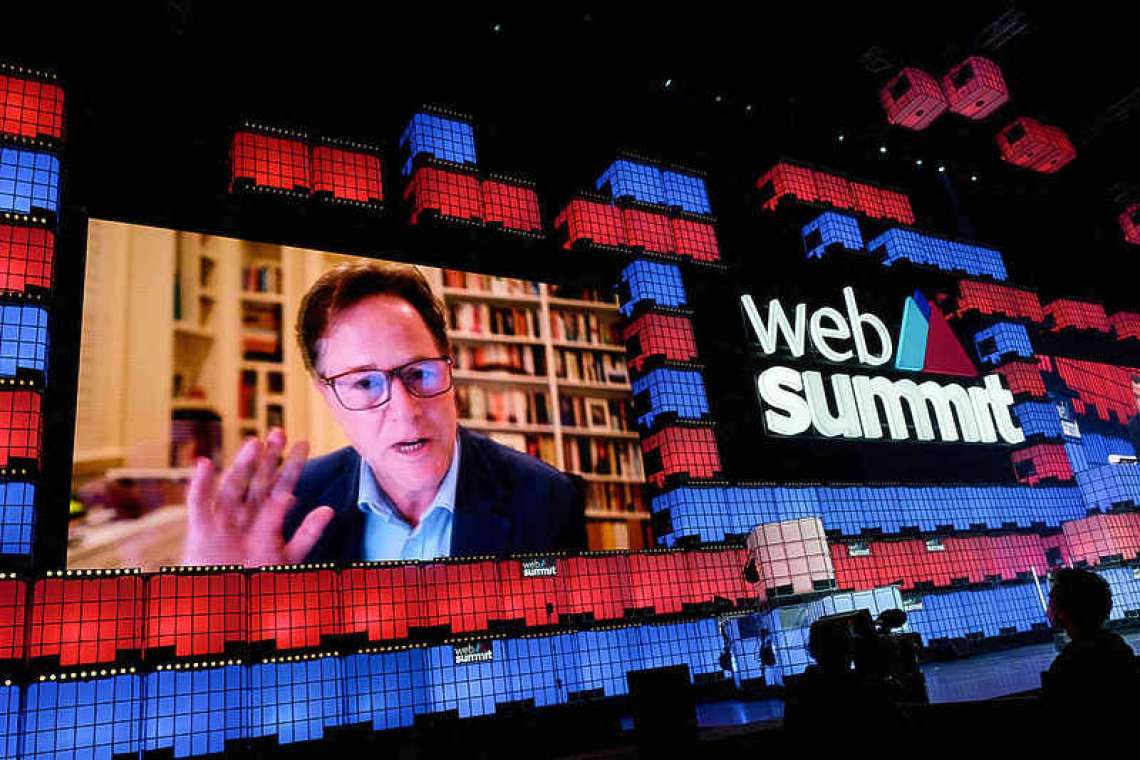

Meta also did not use private chats on its messaging services as training data for the model and took steps to filter private details from public datasets used for training, said Meta President of Global Affairs Nick Clegg, speaking on the sidelines of the company's annual Connect conference this week.

"We've tried to exclude datasets that have a heavy preponderance of personal information," Clegg said, adding that the "vast majority" of the data used by Meta for training was publicly available.

He cited LinkedIn as an example of a website whose content Meta deliberately chose not to use because of privacy concerns.

Clegg's comments come as tech companies including Meta, OpenAI and Alphabet's Google have been criticized for using information scraped from the internet without permission to train their AI models, which ingest massive amounts of data in order to summarize information and generate imagery.

The companies are weighing how to handle the private or copyrighted materials vacuumed up in that process that their AI systems may reproduce, while facing lawsuits from authors accusing them of infringing copyrights.

Meta AI was the most significant product among the company's first consumer-facing AI tools unveiled by CEO Mark Zuckerberg on Wednesday at Meta's annual products conference, Connect. This year's event was dominated by talk of artificial intelligence, unlike past conferences, which focused on augmented and virtual reality.

Meta made the assistant using a custom model based on the powerful Llama 2 large language model that the company released for public commercial use in July, the company said. It will be able to generate text, audio and imagery and will have access to real-time information via a partnership with Microsoft's Bing search engine.

The public Facebook and Instagram posts that were used to train Meta AI included both text and photos, Clegg said.

He said Meta also imposed safety restrictions on what content the tool could generate, such as a ban on the creation of photo-realistic images of public figures.

On copyrighted materials, Clegg said he was expecting a "fair amount of litigation" over the matter of "whether creative content is covered or not by existing fair use doctrine," which permits the limited use of protected works for purposes such as commentary, research and parody.

"We think it is, but I strongly suspect that's going to play out in litigation," Clegg said.

Some companies with image-generation tools facilitate the reproduction of iconic characters like Mickey Mouse, while others have paid for the materials or deliberately avoided including them in training data.

OpenAI, for instance, signed a six-year deal with content provider Shutterstock this summer to use the company's image, video and music libraries for training.

Asked whether Meta had taken any such steps to avoid the reproduction of copyrighted imagery, a Meta spokesperson pointed to new terms of service barring users from generating content that violates privacy and intellectual property rights.